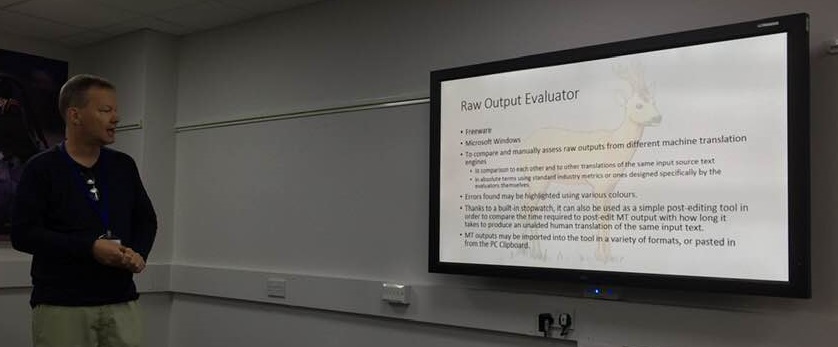

A new software tool from the developer of IntelliWebSearch: Raw Output Evaluator

Raw Output Evaluator, developed for a postgraduate course module that teaches the use of machine translation and post-editing at IULM University in Milan, was officially launched to the public in a workshop at Translating and the Computer 40 in London on 15 November.

The tool was primarily designed to allow students to compare the raw outputs from different machine translation engines, both with each other and with other translations of the same source text, and to carry out comparative human quality assessment using standard industry metrics. The same program can also be used as a simple post-editing tool and, thanks to a built-in timer, to compare the time required to post-edit MT output with the time needed to produce an unaided human translation.

During the workshop, Raw Output Evaluator aroused the interest not only of university lecturers and researchers but also of translation service providers looking for ways to measure the quality of MT output. During the Q&A session, attendees requested three new features: optional randomization of outputs so that evaluators cannot identify the MT engines used, the ability to export scores for external processing, and control over the punctuation marks used for segmentation. All three were promptly implemented in the update released today.

A full paper was also published in the conference proceedings.